Reddit has banned the communities responsible for a rash of computer-generated fake celebrity porn videos, and tightened its rules against “involuntary pornography.”

The subreddit r/deepfakes recently came under scrutiny after redditors began using the forum to make and distribute computer-generated videos featuring celebrities’ faces mapped onto the bodies of porn stars in actual porn videos. On February 7, four months after the eerie videos first appeared on the site, Reddit permanently banned the forum for violating its content policy. Reddit’s response followed earlier bans of the videos, collectively known as “deepfakes,” by Discord, Gfycat, and Pornhub.

Reddit also banned the longstanding r/CelebFakes community, which had served as a forum for users to post photo manipulations of celebrities in sexualized positions. CelebFakes had existed on Reddit since 2011, and the deepfakes videos originated there in late September 2017 before moving to their own dedicated subreddit. In the two months since the forum was created, r/deepfakes gained 25,000 members and jump-started a public conversation about the terrifying potential of algorithm-generated fake videos.

In implementing the ban, Reddit updated its sitewide rules on “involuntary pornography” and “sexual or suggestive content involving minors.” The rule against involuntary pornography covers revenge porn and the spread of private nudes, and extends to any sexualized image “apparently created or posted without [the subject’s] permission, including depictions that have been faked.” In 2014, Reddit attained notoriety as the distribution center of a cache of leaked celebrity nude photos and has since increasingly, if at times reluctantly, tightened its rules regarding illicit material.

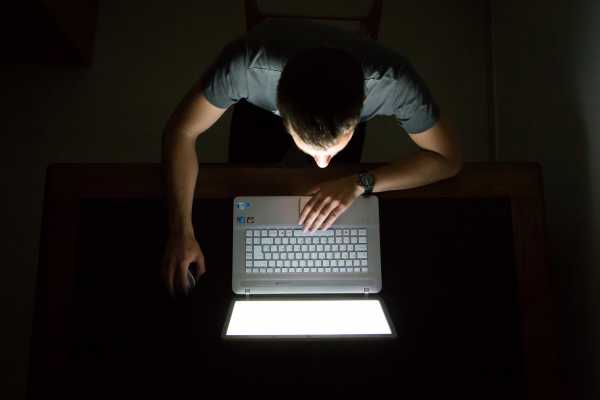

Proponents of deepfakes argued that the technology was already available and free for anyone to use. For example, facial recognition search engines for porn stars already exist, and academic researchers have been publishing advances in machine learning and facial recognition for years, as with a 2016 project that allowed researchers to manipulate video in real time using face-capture modification technology. This body of research has already demonstrated that it’s not that big a leap from manipulating images of porn stars and celebrities to manipulating those of politicians and other public figures. In fact, Reddit users immediately started to apply the technology to face-swapping Donald Trump.

What many find alarming about the deepfake tool is its potential for the production of “fake news” on an unprecedented level. “It’s destabilizing,” professor Deborah Johnson told Vice in reaction to the rapid rise of r/deepfakes. “The whole business of trust and reliability is undermined by this stuff.”

So it’s promising that Reddit and other social media platforms are moving decisively to stamp out the proliferation of involuntary celebrity deepfakes. It may be impossible to stem the tide of involuntary pornography now that the technology has been widely distributed — and may be even harder to stop other users of the tool from generating even weirder fake videos. But Reddit’s ban is an acknowledgment that at their core, deepfakes are invasive and nonconsensual violations of the people whose faces and bodies they’re using. These bans won’t totally stop the problem — but perhaps they can delay our descent into a reality-morphing nightmare for just a bit longer.

Source: vox.com
